In the last decade, data professionals have mastered the art of capturing, storing, and analyzing structured data. We built warehouses, lakes, and mesh architectures to tame rows and columns. Today, we face a paradigm shift. We are no longer just managing static records; we are managing intelligence.

Large Language Models (LLMs) represent a fundamental change in how we process information. They allow us to interact with unstructured data—text, code, and reasoning—with the same rigor we once applied to SQL queries. For the Data Architect or Engineer, an LLM is not magic; it is a probabilistic engine that can be optimized, tuned, and orchestrated.

The Thesis: Before you can architect for AI, you must understand the raw material. Data is no longer static; it is fluid language.

🎯 Core Concepts

🧠 The Large Language Model (LLM) as a “Reasoning Engine”

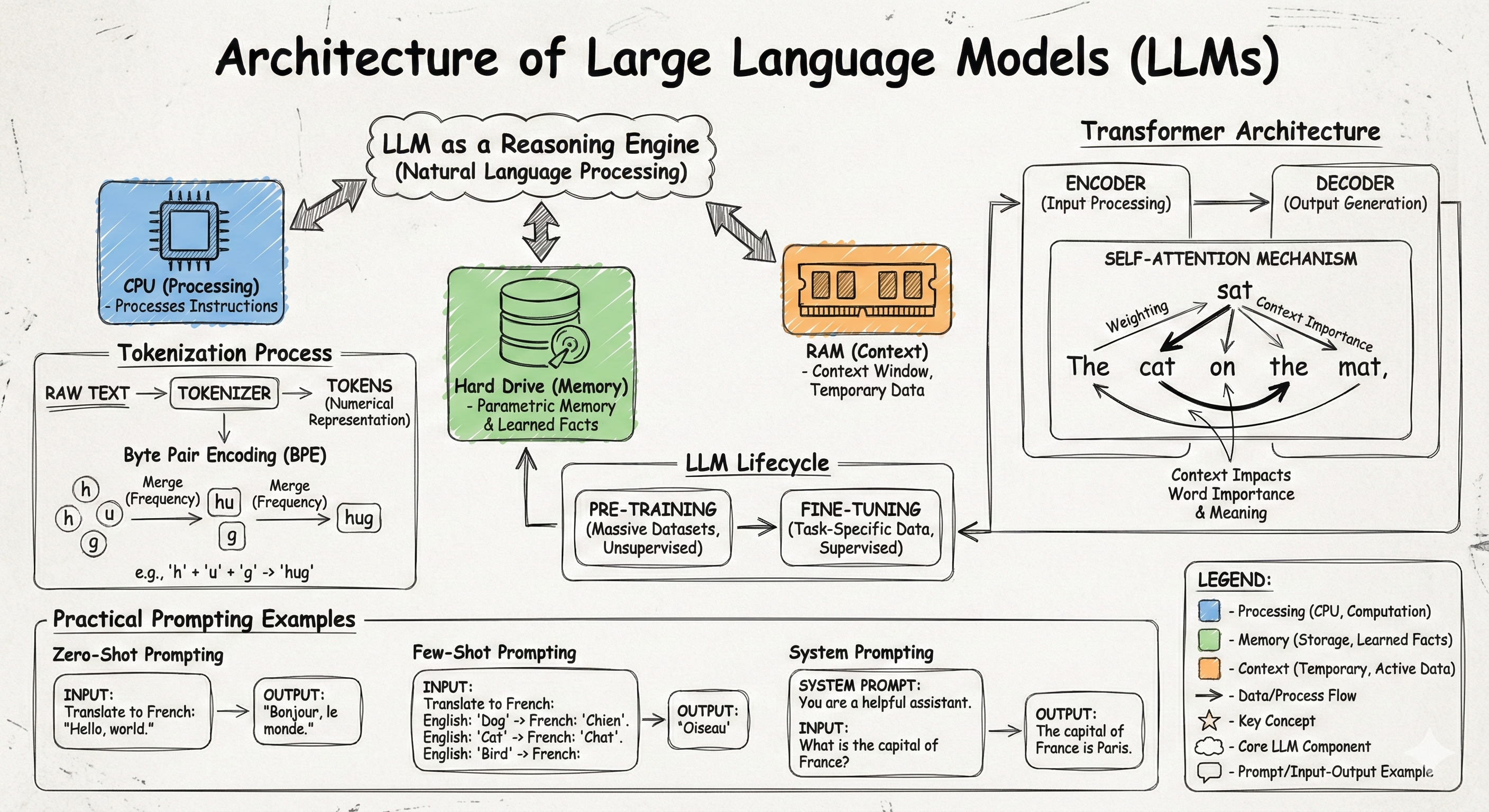

At its simplest, an LLM is a function that maps text to text, but practically, it functions as a Reasoning Engine.

Unlike a database that retrieves exact matches, an LLM retrieves concepts and relationships learned during training. It uses these relationships to “reason” through a problem.

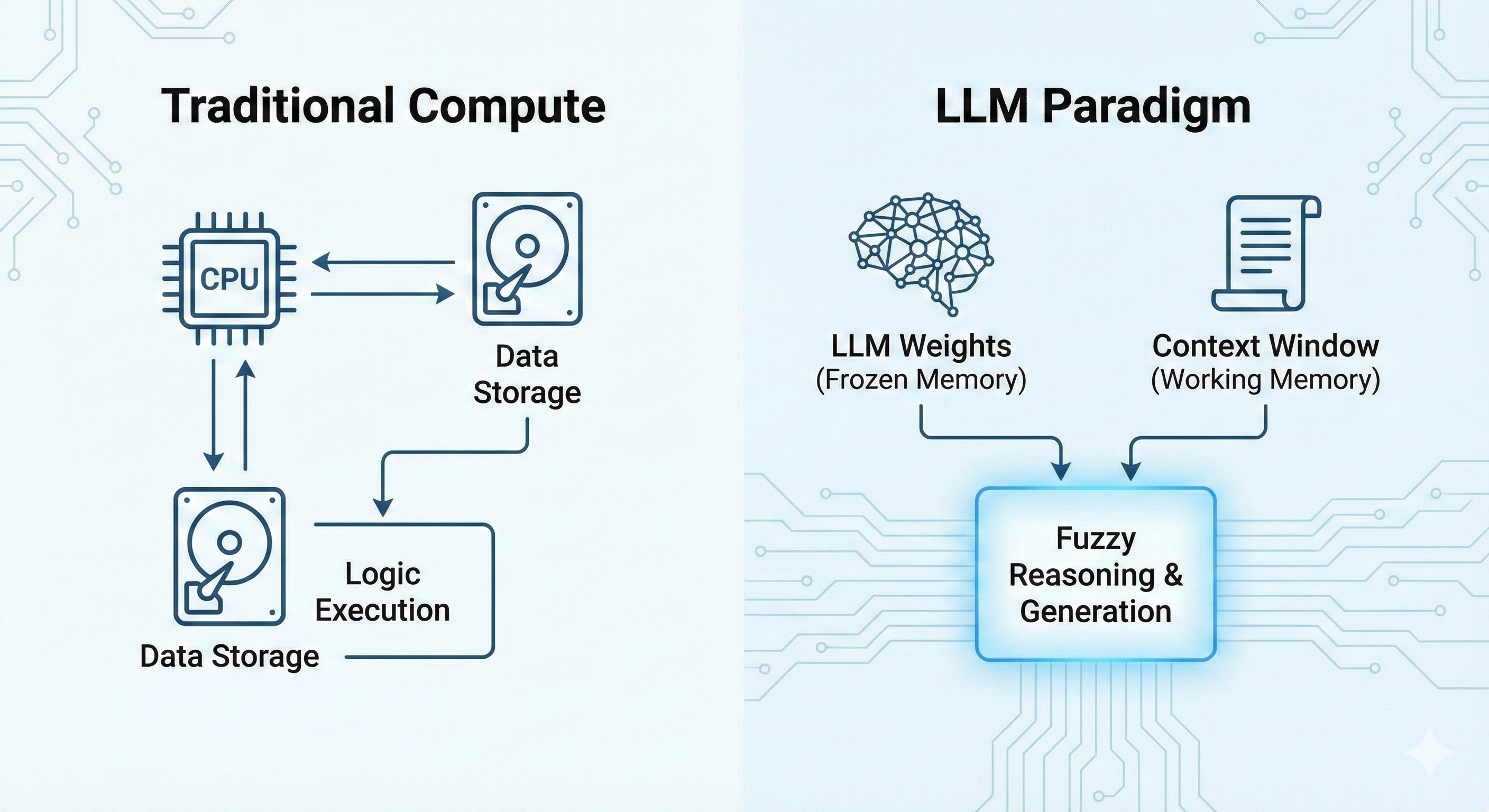

Analogy: The Cognitive Architecture Think of an LLM as a hybrid of traditional computing components, but “fuzzy”:

- 🖥️ CPU (Processing): The LLM acts as a CPU that processes natural language instructions.

- 💾 Hard Drive (Memory): The LLM contains “parametric memory” (facts learned during training).

- ⚡ RAM (Context): The “Context Window” is the temporary workspace where you load specific data for the model to process right now.

🔢 Tokenization and Model Mechanics

Tokenization is the process of converting text into a sequence of integers that the model can ingest. Modern LLMs use Byte Pair Encoding (BPE), an algorithm that iteratively merges the most frequent pair of bytes (or characters) to create a vocabulary. Common words might be single tokens, while complex words are broken down.

Key Mechanics for Data Pros:

- 🔄 Autoregressive Generation: GPT-style models are “decoder-only.” They predict the next token based strictly on the sequence of previous tokens.

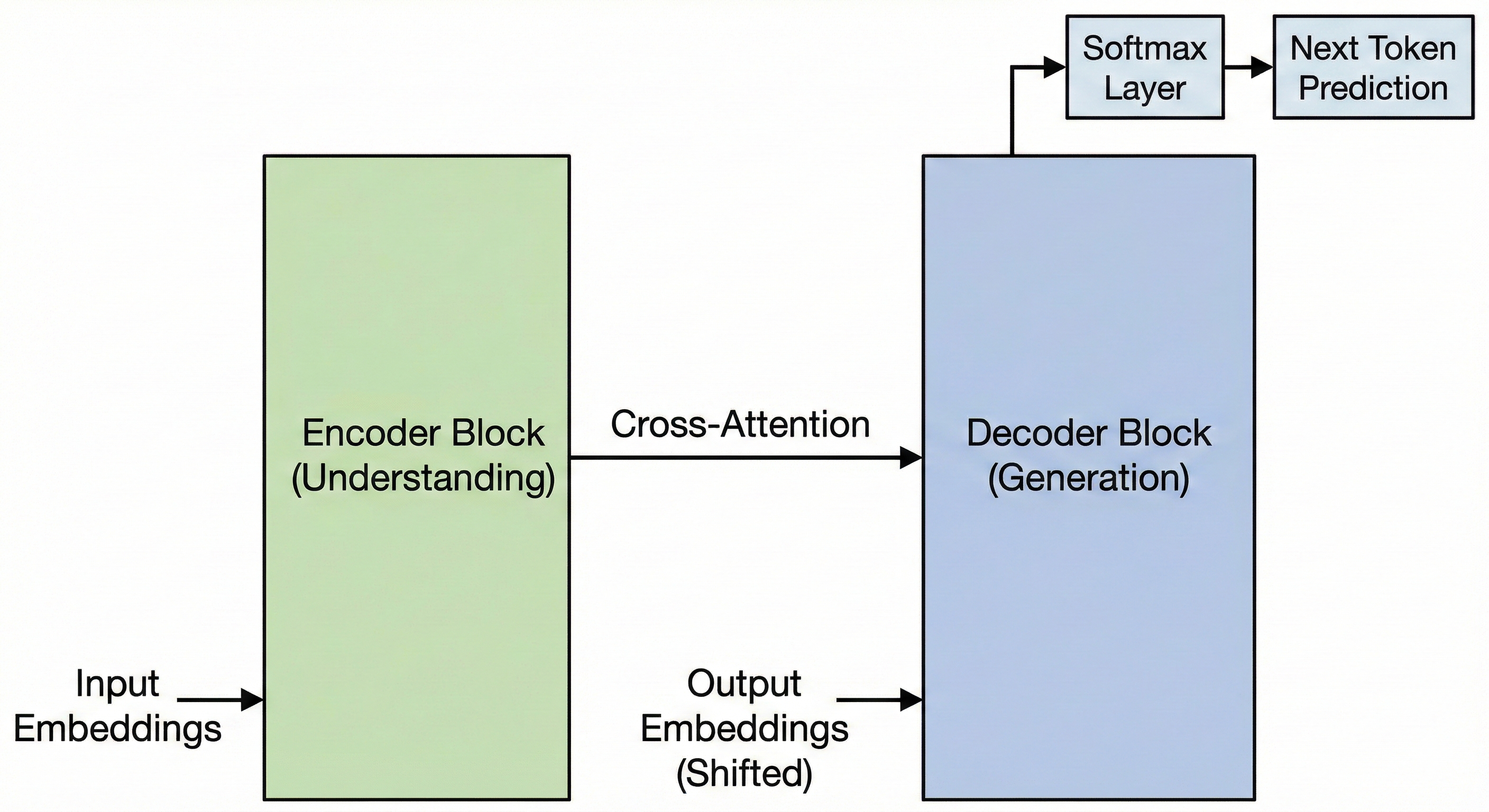

- 🔀 Encoder-Decoder: Architectures like T5 or the original Transformer use an Encoder (to understand input) and a Decoder (to generate output). Most Generative AI today focuses on Decoder-only models.

- 📦 Quantization: To run these massive models efficiently, we reduce the precision of their weights (e.g., from 16-bit floating point to 4-bit integers), significantly lowering memory usage with minimal accuracy loss.

Practical Example: BPE in Python

# Conceptual example of how tokenization works using OpenAI's tiktoken library

import tiktoken

# Load the encoding for GPT-4

enc = tiktoken.encoding_for_model("gpt-4")

text = "Data Architecture is evolving."

tokens = enc.encode(text)

print(f"Original Text: {text}")

print(f"Token IDs: {tokens}")

print(f"Token Count: {len(tokens)}")

# Output Explanation:

# "Data" -> 2366

# " Architecture" -> 14435

# " is" -> 374

# " evolving" -> 18361

# "." -> 13💰 Impact on Cost and Context: Billing and memory are defined by tokens. A standard approximation is 1,000 tokens ≈ 750 words.

If you process 1 million documents, and each document is 500 words, you aren't paying for 500 million words; you are paying for ≈ 666 million tokens. This conversion factor is critical for budget estimation.

🏗️ The Transformer Architecture

Introduced by Google in “Attention Is All You Need” (2017), the Transformer replaced RNNs and LSTMs. It allowed models to process entire sequences of data in parallel (perfect for GPUs) rather than sequentially.

- 📥 Encoder: Focuses on building a rich representation of the input (great for classification, semantic search).

- 📤 Decoder: Focuses on generating the next step (great for text generation, chat).

🧐 The Self-Attention Mechanism

Consider the word “Bank”:

- 💵 “The money is in the bank.” (Context: Financial)

- 🏞️ “The boat is on the river bank.” (Context: Geography)

In a Transformer, the vector representation of “bank” is updated dynamically based on the surrounding words (“money” vs. “river”). This allows LLMs to capture nuance, sarcasm, and code syntax effectively.

📚 Learning Resources

📖 Must-Read Material

- 📘 “The Illustrated Transformer” by Jay Alammar: This is widely considered the best visual explanation of the architecture. It breaks down the matrix multiplications of the Attention mechanism into easy-to-digest animations.

🎯 Coursework

- 🎓 DeepLearning.AI - Generative AI with Large Language Models:

- Focus: Pay specific attention to the LLM Lifecycle module. It explains the critical difference between Pre-training (teaching the model English/Code) and Fine-tuning (teaching the model to follow instructions or behave like a specific persona).

🧪 Hands-On Lab: The Prompt Engineering Sandbox

🛠️ Tools: Open the OpenAI Playground or Anthropic Console. Ensure you are in “Chat” mode.

🎯 Experiment 1: Zero-Shot Prompting

- 💬 Prompt: “Classify the following support ticket into ‘Urgent’, ‘General’, or ‘Spam’: ‘I cannot access the production database and the ETL pipeline is halted.’”

- ✅ Result: The model relies solely on its pre-trained knowledge of what “Urgent” means in an IT context.

🎯 Experiment 2: Few-Shot Prompting

- 💬 Prompt:

Classify these tickets:

Ticket: "Can I update my profile picture?"

Category: General

Ticket: "Buy cheap meds now!"

Category: Spam

Ticket: "The server is returning 500 errors on the checkout page."

Category: Urgent

Ticket: "The data export is taking longer than usual."

Category:- 📊 Analysis: The model will likely output “General” or “Urgent” (depending on your specific definition) with much higher confidence because it mimics the pattern you provided.

🎯 Experiment 3: System Prompting

- ⚙️ System Instruction: “You are a Tier 3 SQL Database Administrator. You answer concisely and always provide optimization tips.”

- 💬 User Input: “How do I delete duplicates?”

- ✅ Result: Instead of a generic explanation, the model will likely provide a CTE-based or window function solution (

ROW_NUMBER()) specific to high-performance SQL, adhering to the persona.

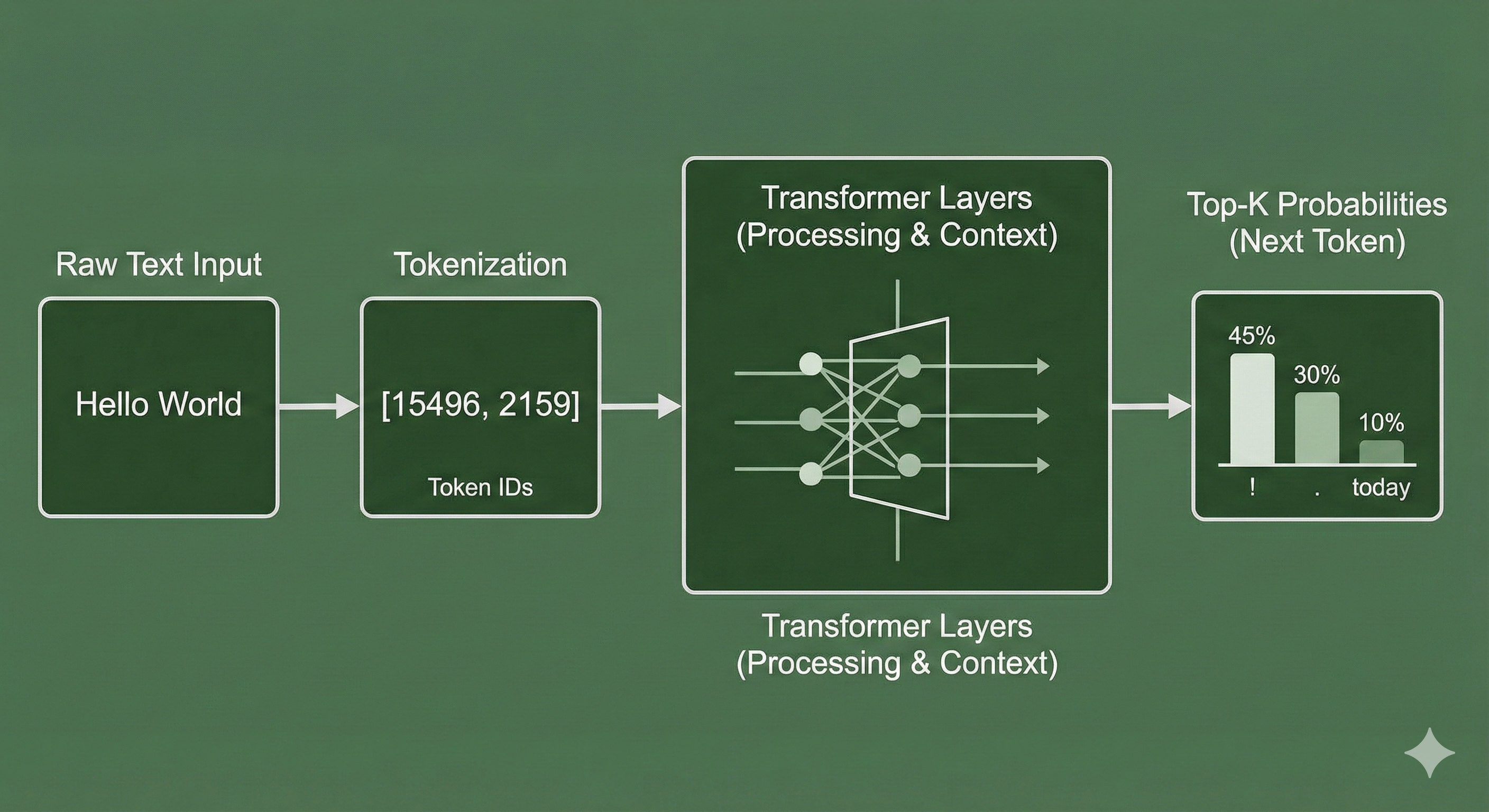

➡️ Visual Representation: The Token Flow

- 📥 Input: The user types “Hello World”.

- 🔢 Integer Conversion: The tokenizer converts this to

[15496, 2159]. - ⚙️ Processing: The model passes these integers through layers of attention mechanisms to understand the greeting context.

- 🎲 Prediction: The model calculates the probability of every token in its vocabulary being the next token.

- ✨ Selection: It selects ”!” (or ”.”) based on the highest probability (or sampling settings), completing the phrase: “Hello World!”

Conclusion for the Data Architect 🚀

Understanding LLMs is not about abandoning your existing skills; it is about extending them. Just as you learned to index a database to optimize retrieval, you must now learn to prompt and fine-tune LLMs to optimize reasoning. The raw material is language, but the discipline of engineering remains the same.

💬 What aspect of LLMs do you find most challenging as a data professional? Let’s discuss in the comments or connect with me on LinkedIn.