💀 Is Traditional Data Architecture Dying?

A deep, architectural walkthrough of what actually breaks — and what replaces it — in the GenAI era

📌 TL;DR

Traditional data architecture is not obsolete.

But it was designed to serve humans reading data, not machines reasoning with context.

This post goes one level deeper than most GenAI blogs.

We’ll define concepts carefully, explain why they exist, and show how they change real-world architectures.

Take your time with this one.

🧠 Section 1: What “Traditional Data Architecture” Actually Means

Before we say something is “dying”, we need to be precise.

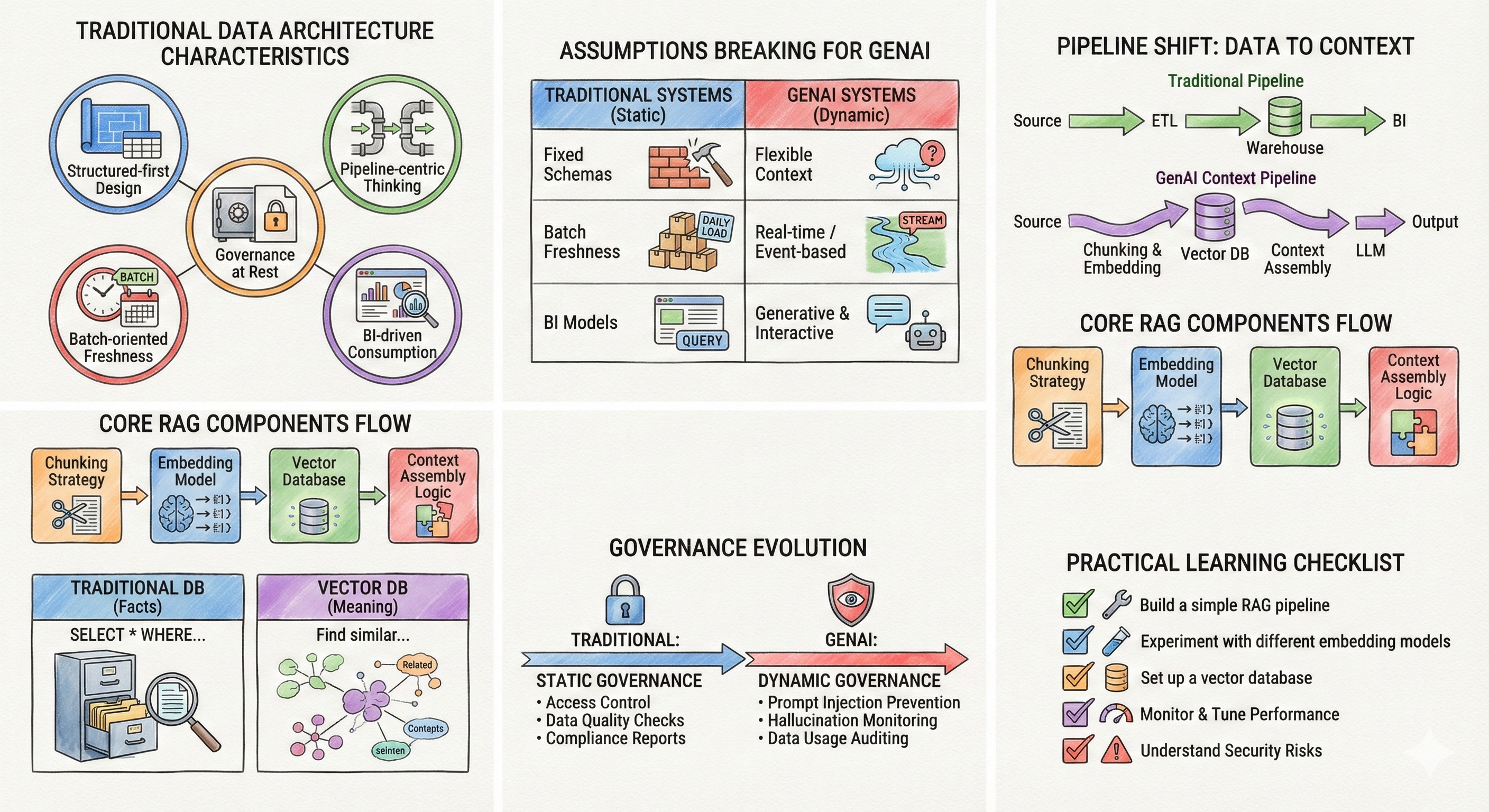

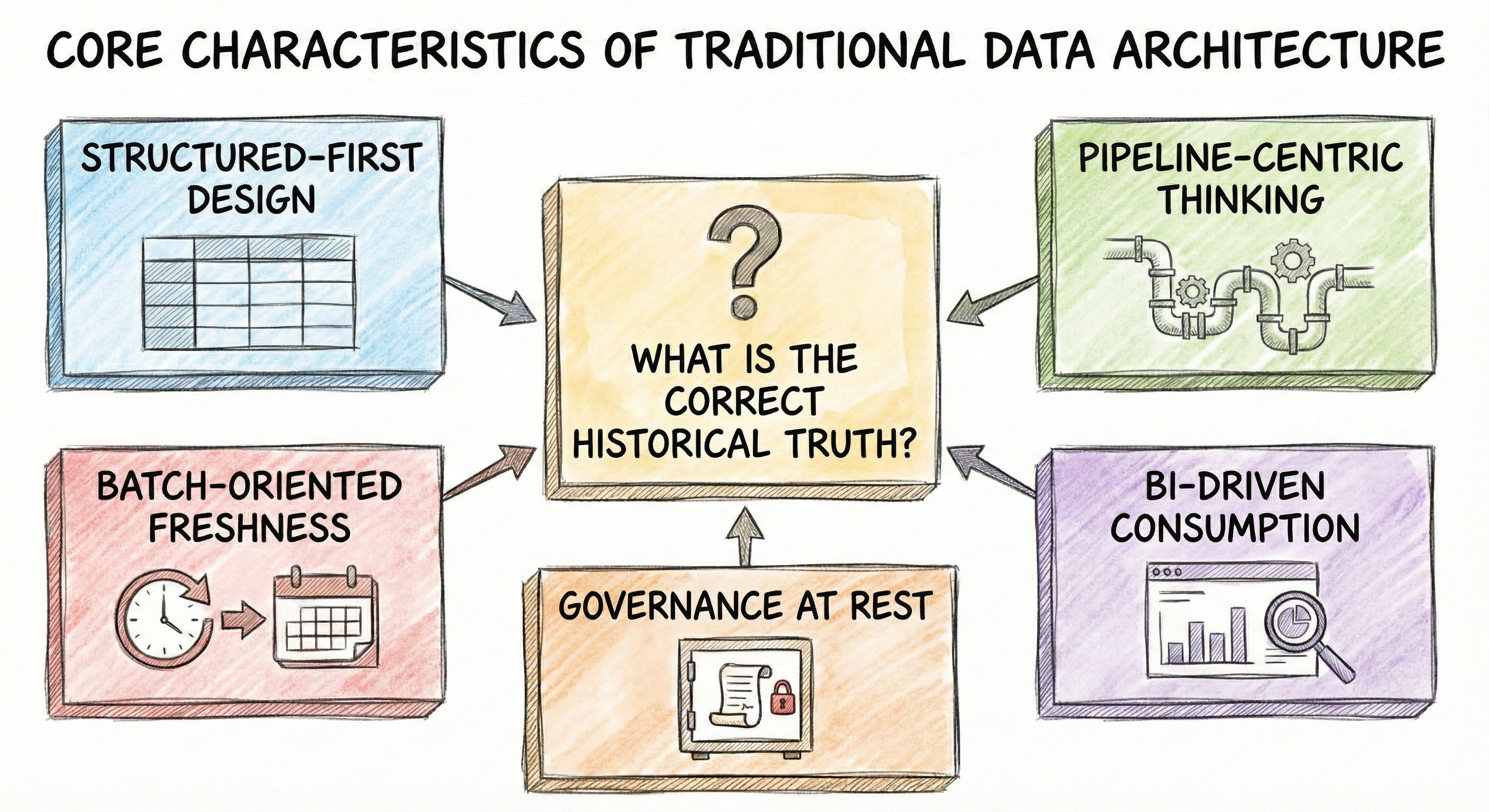

When most enterprises talk about data architecture, they usually mean:

Core Characteristics

1. Structured-first design

- Tables, columns, schemas

- Clearly typed attributes (INT, STRING, DATE)

2. Pipeline-centric thinking

- ETL / ELT pipelines move data forward

- Data quality is enforced before consumption

3. Batch-oriented freshness

- Hourly / daily loads are acceptable

- Humans tolerate delay

4. BI-driven consumption

- Dashboards, reports, ad-hoc SQL

5. Governance at rest

- Masking, encryption, RBAC applied post-load

This architecture evolved to answer one core question:

“What is the correct historical truth?”

And it did that job extremely well.

🧱 Section 2: Why These Assumptions Break for GenAI

GenAI systems don’t query data.

They reason over context.

That single difference breaks multiple assumptions at once.

Let’s break this down carefully.

❌ Assumption 1: Fixed Schemas Are the Primary Interface

In traditional systems:

- Schema = contract

- Breaking schema = breaking downstream consumers

In GenAI systems:

- The model doesn’t “see” schemas

- It sees natural language tokens

Example:

A PDF policy document has:

- Headings

- Paragraphs

- Footnotes

For an LLM, this is raw reasoning material — not an unmodeled problem.

📌 Architectural implication:

Schema moves from being a storage concern to a retrieval concern.

❌ Assumption 2: Batch Freshness Is Good Enough

Humans accept answers like:

- “Data is from yesterday”

AI agents cannot.

Why?

- AI responses often trigger actions

- Stale context = wrong action

Example:

- A support copilot suggesting outdated troubleshooting steps

📌 Architectural implication:

Freshness becomes contextual, not global.

Some documents need real-time updates. Others don’t.

❌ Assumption 3: BI Models Represent Business Reality

BI models optimize for:

- Aggregation

- Performance

- Slice & dice

LLMs optimize for:

- Explanation

- Reasoning

- Natural language synthesis

A star schema is great for a dashboard.

It’s almost useless for grounding an LLM.

📌 Architectural implication:

Analytical models and reasoning context must diverge.

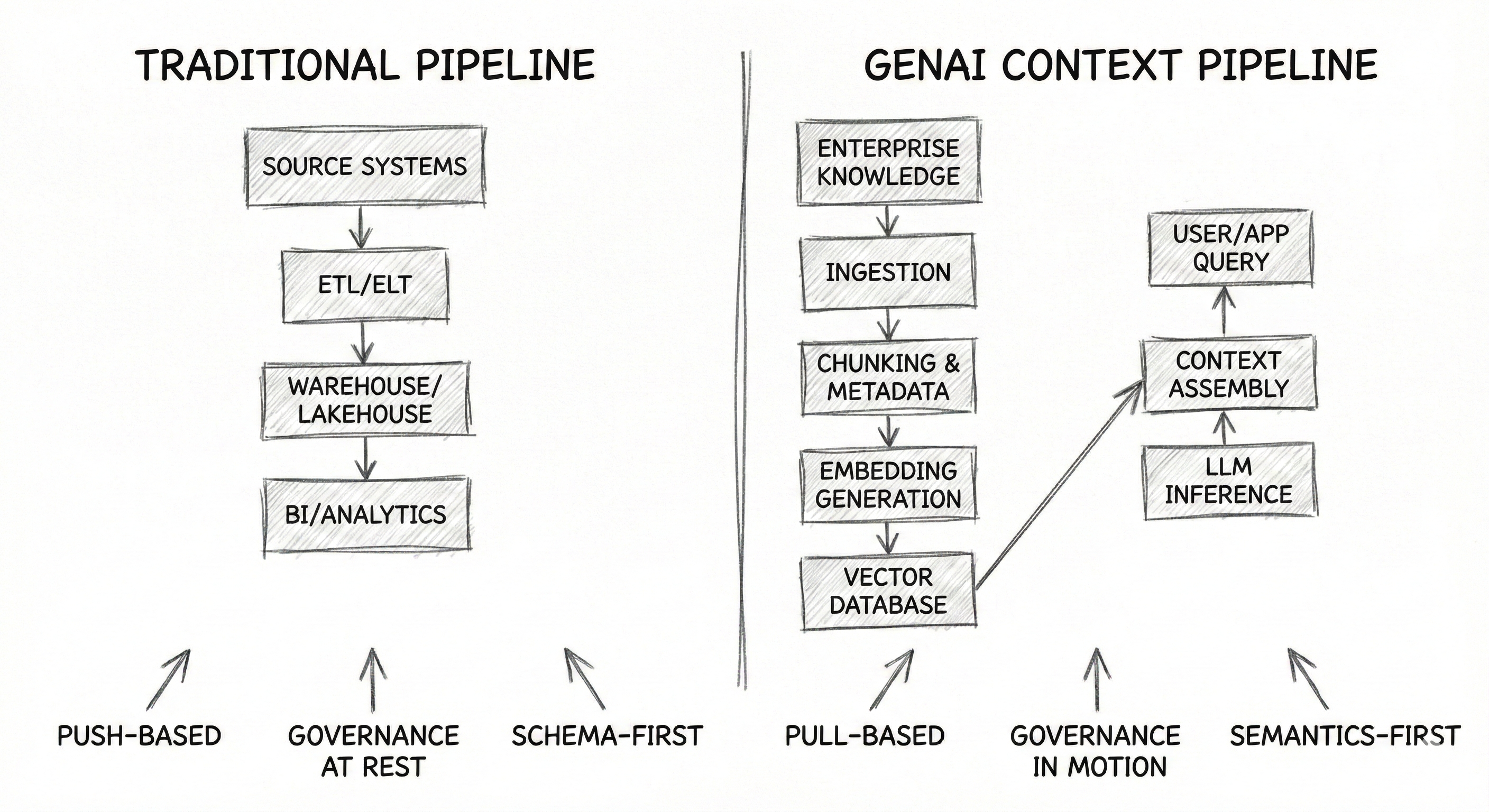

🔄 Section 3: The Shift from Data Pipelines to Context Pipelines

This is the most important mental model shift.

Traditional Pipeline (Simplified)

Source Systems → ETL/ELT → Warehouse/Lakehouse → BI/AnalyticsData flows forward.

Consumption is passive.

GenAI Context Pipeline

Enterprise Knowledge (PDFs, Emails, DBs)

↓

Ingestion

↓

Chunking & Metadata

↓

Embedding Generation

↓

Vector Database

↑

User/App Query → Query Understanding → Vector Search

↓

Context Assembly (with Security Trimming, Policy Filters, Freshness Rules)

↓

LLM Inference

Key Differences

| Traditional | GenAI |

|---|---|

| Push-based | Pull-based |

| Pre-aggregated | Dynamically assembled |

| Schema-first | Semantics-first |

| Governance after load | Governance during retrieval |

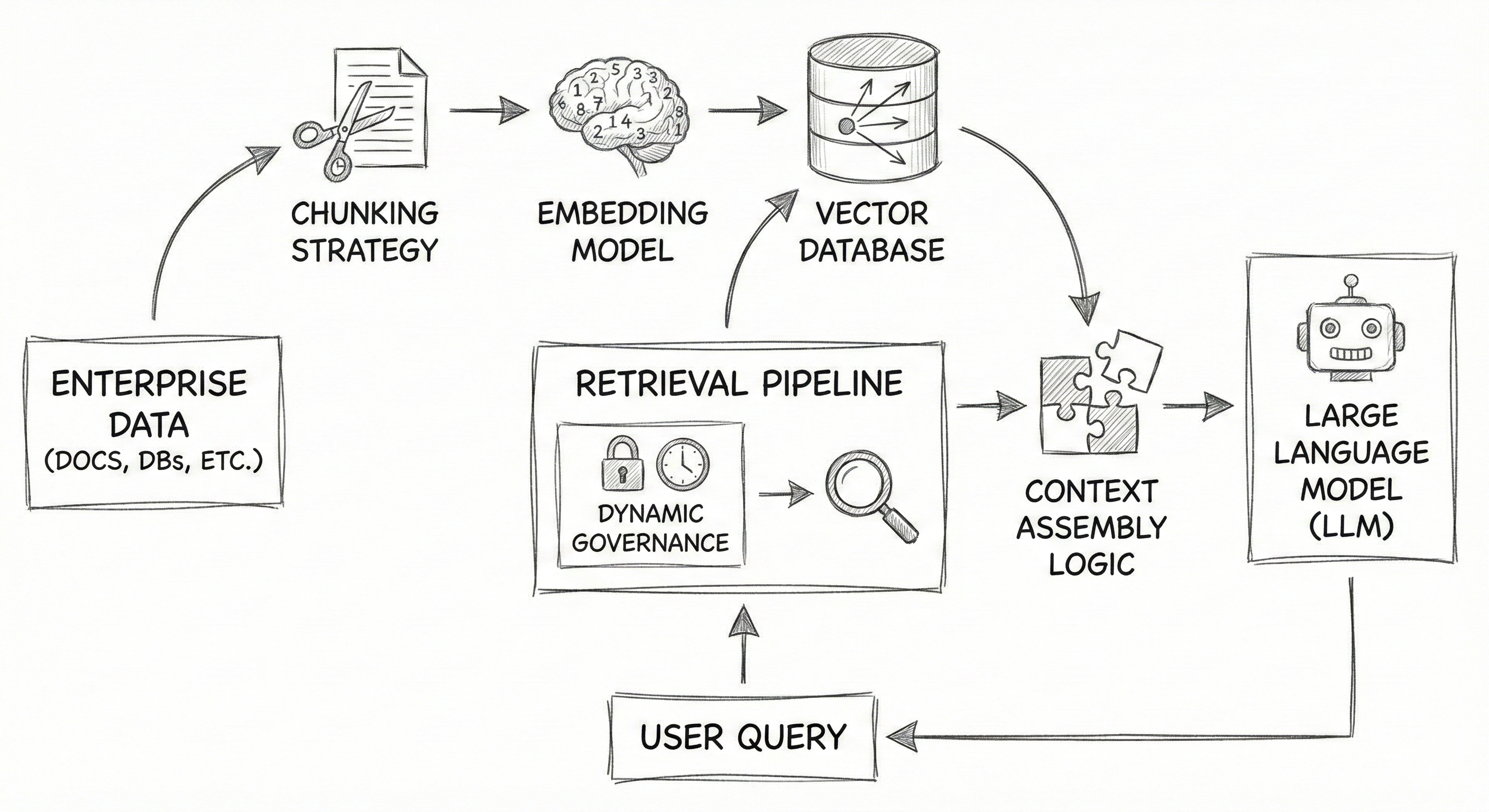

🧬 Section 4: Understanding RAG (Without the Hype)

RAG = Retrieval-Augmented Generation

Let’s strip it down.

RAG simply means:

“Before the model answers, retrieve relevant enterprise context and inject it into the prompt.”

Why This Matters Architecturally

- Models are frozen (no retraining per customer)

- Data changes constantly

- Retrieval bridges that gap

Core RAG Components

1. Chunking strategy

- How big is a piece of knowledge?

2. Embedding model

- How meaning is represented numerically

3. Vector database

- Semantic retrieval engine

4. Context assembly logic

- How much context is enough?

These are data architecture decisions, not AI ones.

🧠 Section 5: Why Vector Databases Are Not “Just Another Store”

Vector databases solve a fundamentally different problem.

Traditional DB Question

“Find rows where value = X”

Vector DB Question

“Find content similar in meaning to this query”

This enables:

- Semantic search

- Fuzzy reasoning

- Knowledge discovery

📌 Architectural shift:

You are no longer indexing facts.

You are indexing meaning.

That changes:

- Storage strategy

- Index design

- Cost models

🛡️ Section 6: Governance Moves from Static to Dynamic

In traditional systems:

- Data is secured once

- Consumers inherit access

In GenAI:

- Each query is a new data product

New Governance Questions

- Should this user see this paragraph?

- Should this document be included right now?

- Is this context allowed for this use case?

📌 Architectural implication:

Governance must execute inside the retrieval pipeline.

Not before. Not after.

📌 Section 7: What You Should Do Differently — Starting Now

Practical Learning Checklist

-

Map your unstructured data landscape

- Where are your PDFs, emails, wikis?

-

Identify retrieval paths, not just pipelines

- How would an AI agent find relevant context?

-

Decide where vector search belongs

- Separate system or integrated layer?

-

Treat RAG as data architecture, not AI magic

- It’s about data preparation and retrieval

-

Push governance into runtime workflows

- Security trimming at query time, not at rest

If you can’t answer these yet — that’s expected.

That’s why this series exists.

🎯 Final Answer: Is Traditional Data Architecture Dying?

No.

But it was never designed to:

- Serve probabilistic systems

- Assemble context dynamically

- Govern meaning instead of rows

The Data Solution Architect’s role isn’t shrinking.

It’s getting deeper.

➡️ Coming Next

Week 3: 🧩 DSA vs AI Architect vs Enterprise Architect

We’ll define boundaries, overlaps, and career impact — clearly and honestly.

💬 A Data Solution Architect relearning architecture for the GenAI era