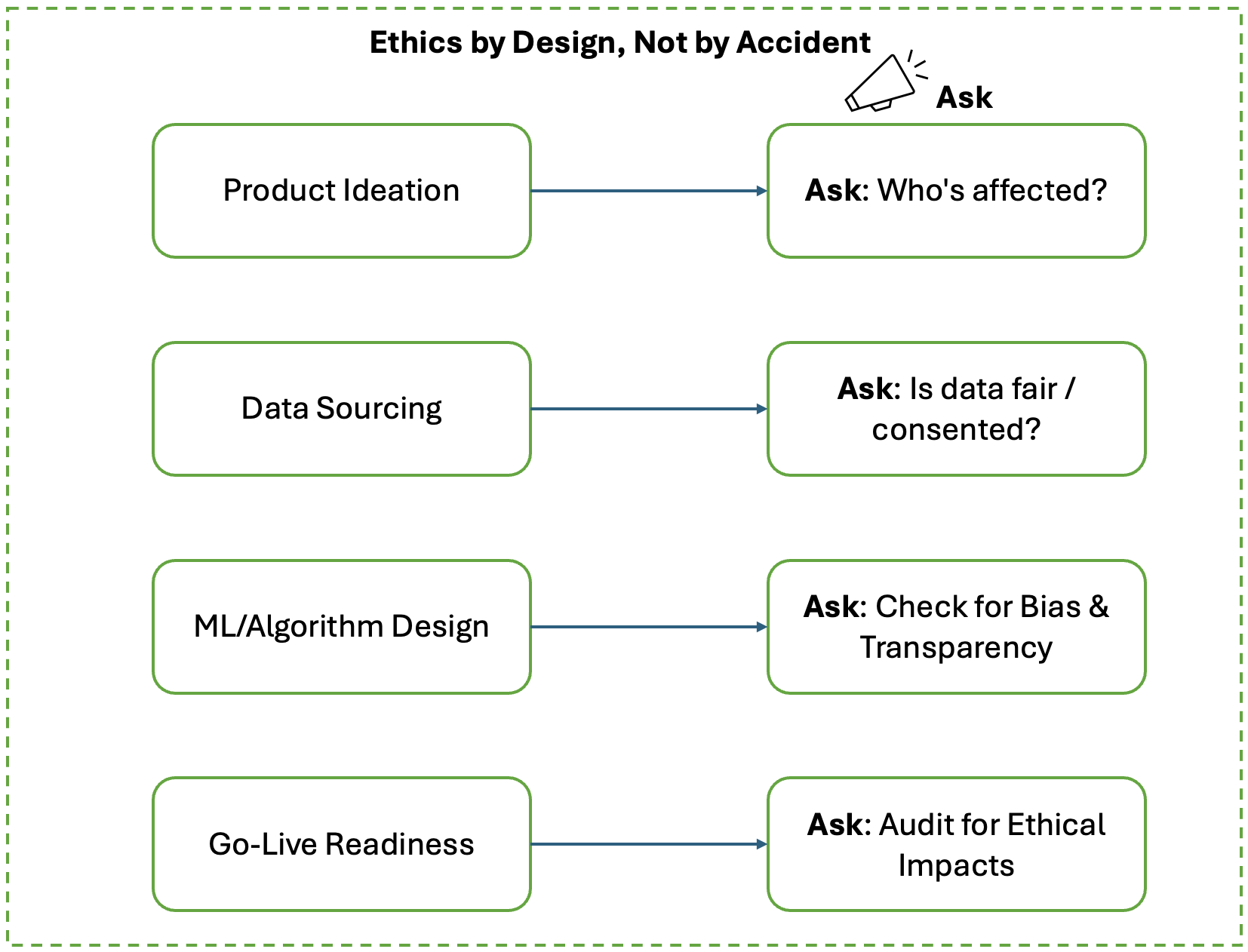

Data Ethics by Design — Not by Accident

📘 What Sparked This Thought

Teams often get excited about AI, analytics, and personalization. Ethics tends to surface only when something goes wrong.

What if we flipped this thinking?

What if ethics were part of the design, not a clean-up crew?

💡 My Understanding

Ethical data handling starts where architecture starts:

- At the whiteboard

- In backlog grooming

- During feature prioritization

Ask upfront:

- Who could this data harm?

- Who benefits? Who doesn’t?

- Are we exposing anyone unnecessarily?

Answering these questions early catches many issues before any code is written.

🔍 Real-World Example: The Algorithm That Discriminated

A financial services firm built a model for credit scoring.

Because the training data was biased, the model unintentionally disadvantaged a minority demographic.

No one asked about bias at the design stage.

Fixing it later became costly, reputationally and financially.

🔄 Practical Approach

Embed ethics reviews early:

1️⃣ Product ideation → Who’s affected?

2️⃣ Data sourcing → Is this fair, representative, and collected with consent?

3️⃣ Algorithm design → Is it checked for bias? Can its decisions be explained?

4️⃣ Go-live readiness → Audit potential impacts.
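
To make the bias check in step 3 concrete, here is a minimal Python sketch, not a definitive method. It compares approval rates across groups and flags the model for review when the lowest rate falls too far below the highest. The function names, the field names (`group`, `approved`), and the sample decisions are all hypothetical. The 0.8 threshold is an assumption loosely borrowed from the "four-fifths" rule of thumb; the right metric and threshold depend on the product and its legal context.

```python
from collections import defaultdict


def approval_rates(records, group_key="group", outcome_key="approved"):
    """Return the share of approved records for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            approved[row[group_key]] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical model decisions, invented purely for illustration.
decisions = (
    [{"group": "A", "approved": True}] * 8
    + [{"group": "A", "approved": False}] * 2
    + [{"group": "B", "approved": True}] * 5
    + [{"group": "B", "approved": False}] * 5
)

THRESHOLD = 0.8  # assumed review threshold, not a universal standard

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < THRESHOLD

print(f"Approval rates: {rates}")
print(f"Disparate impact ratio: {ratio:.3f}")
print("Flag for ethics review" if needs_review else "Within assumed threshold")
```

A single ratio like this does not prove a model is fair or unfair. It is a cheap early signal that tells the team when to stop and have the cross-functional conversation.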

✅ Key Takeaways

- Ethics should be a design principle, not a patch.

- Prevention is cheaper than recovering from an ethical failure.

- Bias hides in data, models, and assumptions.

- Cross-functional conversations build ethical resilience.

🤔 Questions I’m Still Thinking About

- Should every data product have an ethics review checkpoint?

- Can we create reusable frameworks for ethical design?

- How do we educate engineers on ethical red flags?

💬 Final Thoughts

Design with ethics, or rebuild with regret.

Your future customers — and your future legal team — will thank you.